Lambda Response Streaming allows sending incremental responses, but those response chunks can arrive long after you write them. They are buffered in a rather unexpected way, delaying all messages you stream. But there is a workaround to force the stream to flush.

TL;DR Lambda does not send small payloads immediately, instead waiting even a few seconds until you write more. The trick is to make each chunk at least 100 KB, filled with whitespace for example.

Enabling Lambda Response Streaming

Follow the docs on how to use Response Streaming in Lambda. Here is a summary of steps:

- create a Node.js Lambda function (this is the only supported managed runtime),

- create a Function URL,

- set the Function URL invoke mode to RESPONSE_STREAM,

- wrap your handler function in awslambda.streamifyResponse(), which is provided globally (using TypeScript? here are the typings).

Lambda Response Streaming does not work as you expect

If you copy and run the Lambda Response Streaming example from the AWS documentation, it does not work as you may expect.

exports.handler = awslambda.streamifyResponse(

async (event, responseStream, _context) => {

// Metadata is a JSON serializable JS object. Its shape is not defined here.

const metadata = {

statusCode: 200,

headers: {

"Content-Type": "application/json",

"CustomHeader": "outerspace"

}

};

// Assign to the responseStream parameter to prevent accidental reuse of the non-wrapped stream.

responseStream = awslambda.HttpResponseStream.from(responseStream, metadata);

responseStream.write("Streaming with Helper \n");

await new Promise(r => setTimeout(r, 1000));

responseStream.write("Hello 0 \n");

await new Promise(r => setTimeout(r, 1000));

responseStream.write("Hello 1 \n");

await new Promise(r => setTimeout(r, 1000));

responseStream.write("Hello 3 \n");

await new Promise(r => setTimeout(r, 1000));

responseStream.end();

await responseStream.finished();

}

);

You might expect to receive the “Streaming with Helper” text almost immediately and then each subsequent message one second apart.

But that’s not what happens.

Instead, you get the first chunk of text after 2 seconds, with the first two lines together. Then, after 1 second, you get the third line, and finally, the last line after another second:

The moment you get the first chunk is also visible in the request timing in Chrome dev tools:

And no, it’s not a cold start. The Lambda was warmed before.

What is Lambda Response Streaming, again?

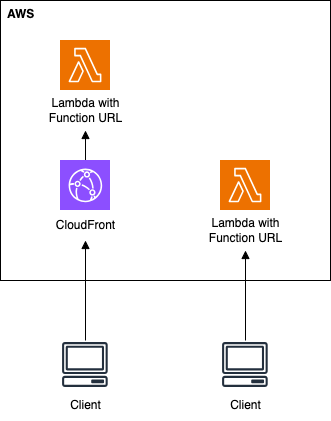

Earlier this year, AWS Lambda got support for Response Streaming. Instead of returning the whole response at the end of execution, you can now progressively send next chunks of data. You can use it only with Function URLs (dedicated HTTP endpoints for Lambda function), not API Gateway. The client accesses it directly by the Function URL or through the CloudFront proxy (which can provide a nice common domain name for all functions, among other things):

There are several use cases described in the announcement blog post. One is streaming partially generated data as soon as it’s available to improve performance and reduce client wait time. The other is streaming large payloads, like images, videos, documents, or database query results, without buffering it first into memory and with a higher total response payload size than the regular 6 MB.

The third mentioned use case is reporting incremental progress from long-running operations. But as you just saw, it’s not necessarily behaving as expected.

Timing the request

What exactly happens? When are the next chunks of text actually sent? Let’s measure it.

First, I need to know the rough difference between my local time and the server clock (yes, Lambda is running on some server 🤯). Let’s do this with this very clunky code:

export const handler = async (event) => {

const serverTime = new Date();

const html = `

Server time: ${serverTime.toISOString()}<br>

<script>

const clientTime = new Date();

document.write("Client time: " + clientTime.toISOString() + "<br>");

document.write("Difference (ms): " + (clientTime.getTime() - ${serverTime.getTime()}));

</script>

`;

return {

"statusCode": 200,

"headers": {

"Content-Type": "text/html",

},

"body": html,

};

};

I’ve set up this Lambda with Function URL but in a BUFFERED invoke mode.

It’s the default mode, in which Lambda returns the payload at the end of the invocation.

Opening the URL in a browser, I get this:

Server time: 2023-09-13T17:59:30.337Z

Client time: 2023-09-13T17:59:30.256Z

Difference (ms): -81

Running it multiple times, I get a time difference from -50 to -85 ms. Yes, minus. The server’s clock is ahead of my local time.

The exact time difference can vary between Lambda execution environments (if they run on different servers) and exact network latency. However, I only need a rough value to know how much the clock difference affects further measurements.

Having this, I can measure the differences between when chunks are produced and when I receive them in the browser. Here is another clumsy piece of code:

export const handler = awslambda.streamifyResponse(

async (event, responseStream) => {

responseStream.setContentType("text/html");

responseStream.write(generateChunk(1));

await new Promise(r => setTimeout(r, 1000));

responseStream.write(generateChunk(2));

await new Promise(r => setTimeout(r, 1000));

responseStream.write(generateChunk(3));

await new Promise(r => setTimeout(r, 1000));

responseStream.write(generateChunk(4));

responseStream.end();

}

);

const generateChunk = (n) => {

const serverTime = new Date();

return `

Chunk: ${n}<br>

Server time: ${serverTime.toISOString()}<br>

<script>

const clientTime${n} = new Date();

document.write("Client time: " + clientTime${n}.toISOString() + "<br>");

document.write("Difference (ms): " + (clientTime${n}.getTime() - ${serverTime.getTime()}) + "<br><br>");

</script>

`;

}

And here is the output:

Chunk: 1

Server time: 2023-09-13T18:05:41.533Z

Client time: 2023-09-13T18:05:43.454Z

Difference (ms): 1921

Chunk: 2

Server time: 2023-09-13T18:05:42.534Z

Client time: 2023-09-13T18:05:43.454Z

Difference (ms): 920

Chunk: 3

Server time: 2023-09-13T18:05:43.536Z

Client time: 2023-09-13T18:05:44.449Z

Difference (ms): 913

Chunk: 4

Server time: 2023-09-13T18:05:44.537Z

Client time: 2023-09-13T18:05:44.449Z

Difference (ms): -88

Interestingly, in this case, I got the response in only two parts: chunks 1 and 2 together, and then chunks 3 and 4 together. Accounting for the ~80 ms clock difference, the first chunk was sent with a 2-second delay, the next two with a 1-second delay, and only the last one was sent straight away.
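To make that arithmetic explicit, here is a small sketch that recomputes the delays from the timestamps printed above. The -80 ms offset is my rough estimate from the earlier clock measurement, not an exact value, and the variable names are only for illustration.

```javascript
// Timestamps copied from the streamed output above.
const chunks = [
  { n: 1, server: "2023-09-13T18:05:41.533Z", client: "2023-09-13T18:05:43.454Z" },
  { n: 2, server: "2023-09-13T18:05:42.534Z", client: "2023-09-13T18:05:43.454Z" },
  { n: 3, server: "2023-09-13T18:05:43.536Z", client: "2023-09-13T18:05:44.449Z" },
  { n: 4, server: "2023-09-13T18:05:44.537Z", client: "2023-09-13T18:05:44.449Z" },
];

// Assumption: client clock minus server clock is roughly -80 ms.
const CLOCK_OFFSET_MS = -80;

const analyzed = chunks.map(({ n, server, client }) => ({
  n,
  // The raw difference includes the clock offset, so subtract it
  // to estimate how long the chunk really waited.
  delayMs: Date.parse(client) - Date.parse(server) - CLOCK_OFFSET_MS,
  arrivedAt: client,
}));

// Chunks that share a client timestamp arrived in the same part of the response.
const parts = new Map();
for (const { n, arrivedAt } of analyzed) {
  parts.set(arrivedAt, [...(parts.get(arrivedAt) ?? []), n]);
}

console.log(analyzed.map((a) => a.delayMs)); // [ 2001, 1000, 993, -8 ]
console.log([...parts.values()]);            // [ [ 1, 2 ], [ 3, 4 ] ]
```

The -8 ms for the last chunk is within the noise of the offset estimate, so it effectively means "no delay".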

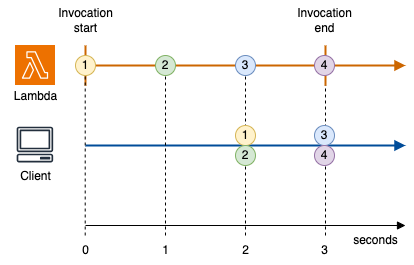

Let’s visualize this:

As you can see, Lambda does not immediately send the next chunks. Instead, they are buffered, and sending the next chunk is what “pushes” them out.

Why is that?

🤷‍♂️

It’s not the browser’s fault. Accessing the URL using cURL with the --no-buffer flag shows the same behavior. Even with no client-side JavaScript executed, you can see the response is sent with a delay and in only two parts.
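If you prefer to check this from Node.js instead of cURL, a minimal sketch could look like the one below. It assumes Node.js 18 or newer (for the global fetch), and timeChunks is a hypothetical helper name, not something from the AWS docs. Keep in mind that the parts it reports are whatever arrives from the network, which may be merged or split compared to what the function wrote.

```javascript
// Reads a streamed HTTP response and records when each part arrives.
async function timeChunks(url) {
  const start = performance.now();
  const response = await fetch(url);
  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  const arrivals = [];

  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    arrivals.push({
      elapsedMs: Math.round(performance.now() - start), // time since the request started
      bytes: value.byteLength,
      preview: decoder.decode(value, { stream: true }).slice(0, 40),
    });
  }
  return arrivals;
}

// Usage (placeholder URL, replace with your own Function URL):
// timeChunks("https://<your-function-url>/").then(console.table);
```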

The best explanation I can think of is that it’s some form of internal Lambda optimization against sending too many small chunks. I suspect this is intended, not a bug.

However, the fact that a new chunk “pushes out” the previous one but itself is not sent is unexpected.

But I’m very far from being an expert in networking (both in terms of computer connectivity and making business relationships, although only the first one is applicable here).

Forcing Lambda Response Streaming flush

So the question is: can we force Lambda to send our data immediately? To flush the response stream buffer?

Yes. And as with many things, it’s a workaround/hack.

Node.js does not provide any “flush” method on stream.Writable (this is what responseStream is). The closest are writable.cork() and writable.uncork(), which hold writes back in memory and then release them. That is quite the opposite of what we need, and calling those methods does not force the response to be sent to the client.
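To see what cork() and uncork() actually do, here is a small sketch on a plain Node.js Writable. The sink below is a local stand-in I made up for the demonstration, not Lambda's responseStream, so it only shows the Node.js side of the story.

```javascript
const { Writable } = require("node:stream");

// A stand-in sink that records every chunk it actually receives.
const received = [];
const sink = new Writable({
  write(chunk, _encoding, callback) {
    received.push(chunk.toString());
    callback();
  },
});

sink.cork();                      // start holding writes in memory
sink.write("Hello 0\n");
sink.write("Hello 1\n");
const receivedWhileCorked = received.length;
console.log(receivedWhileCorked); // 0, nothing has reached the sink yet

sink.uncork();                    // release the held writes to the sink
console.log(received.length);     // 2
```

So uncork() only hands the held writes over to the underlying stream. Whatever buffering happens after that point, inside Lambda, is out of its reach.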

But you see, Lambda response streams send big chunks immediately. So, if we make our chunks larger, they will be sent right away. With HTML content, it’s simple: you can just add whitespace:

const bigStr = " ".repeat(100_000); // generate 100 KB string

export const handler = awslambda.streamifyResponse(

async (event, responseStream) => {

responseStream.setContentType("text/html");

responseStream.write(generateChunk(1) + bigStr); // add it

await new Promise(r => setTimeout(r, 1000));

responseStream.write(generateChunk(2) + bigStr); // to

await new Promise(r => setTimeout(r, 1000));

responseStream.write(generateChunk(3) + bigStr); // every

await new Promise(r => setTimeout(r, 1000));

responseStream.write(generateChunk(4) + bigStr); // chunk

responseStream.end();

}

);

const generateChunk = (n) => {

const serverTime = new Date();

return `

Chunk: ${n}<br>

Server time: ${serverTime.toISOString()}<br>

<script>

const clientTime${n} = new Date();

document.write("Client time: " + clientTime${n}.toISOString() + "<br>");

document.write("Difference (ms): " + (clientTime${n}.getTime() - ${serverTime.getTime()}) + "<br><br>");

</script>

`;

}

And in the response we get:

Chunk: 1

Server time: 2023-09-13T18:44:35.302Z

Client time: 2023-09-13T18:44:35.290Z

Difference (ms): -12

Chunk: 2

Server time: 2023-09-13T18:44:36.303Z

Client time: 2023-09-13T18:44:36.214Z

Difference (ms): -89

Chunk: 3

Server time: 2023-09-13T18:44:37.305Z

Client time: 2023-09-13T18:44:37.199Z

Difference (ms): -106

Chunk: 4

Server time: 2023-09-13T18:44:38.305Z

Client time: 2023-09-13T18:44:38.291Z

Difference (ms): -14

With the first chunk sent immediately:

I found the value of 100 KB (or 100 000 spaces) experimentally, incrementing the number of spaces by an order of magnitude. 10 KB was too small to force flush.
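If you write chunks in many places, the padding can be wrapped in a tiny helper. This is only a sketch: writeAndFlush is a hypothetical name, the 100 000 value is the one I found experimentally above rather than a documented threshold, and the approach fits only formats where trailing whitespace is harmless, such as HTML.

```javascript
// Padding size from the experiment above: 100 000 spaces forced a flush.
const FLUSH_PADDING = " ".repeat(100_000);

// Hypothetical helper: writes a chunk, padding small ones so they are sent right away.
// Chunks that are already at least as big as the padding are written unchanged.
const writeAndFlush = (stream, chunk) => {
  const isSmall = Buffer.byteLength(chunk) < FLUSH_PADDING.length;
  return stream.write(isSmall ? chunk + FLUSH_PADDING : chunk);
};

// Usage inside the handler (assuming responseStream from streamifyResponse):
// writeAndFlush(responseStream, generateChunk(1));
```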

Should you do this?

For most use cases of Lambda Response Streaming, chances are the output is already quite large, so chunks will be sent quickly.

And there are probably no sleep() functions in the middle.

But if you are using Response Streaming for something more fancy, like dynamically displaying the progress of some process, and you send small payloads you want to display immediately, then yes, it’s an option.

Bonus – progress streaming example

The last ugly snippet for today – streaming the progress bar example:

export const handler = awslambda.streamifyResponse(

async (event, responseStream) => {

responseStream.setContentType("text/html");

responseStream.write('<meta charset="UTF-8">');

responseStream.write("<style>body { font-family: monospace; }</style>");

responseStream.write("Progress:");

responseStream.write(`<div id="progress-bar">${generateProgressBar(0)}</div>`);

responseStream.write(bigStr);

let percent = 0;

do {

percent += getRandomInt(10, 20);

percent = Math.min(percent, 100);

await new Promise(r => setTimeout(r, 1000));

const bar = generateProgressBar(percent);

responseStream.write(`<script>document.getElementById("progress-bar").innerHTML = "${bar}"</script>` + bigStr);

} while (percent < 100);

responseStream.end();

}

);

const bigStr = " ".repeat(100_000);

const getRandomInt = (min, max) => {

return Math.floor(Math.random() * (max - min + 1)) + min;

};

const generateProgressBar = (percent) => {

let bar = "[";

for (let n = 0; n < 20; n++) {

if (percent < (n + 1) * 5) {

bar += "▱";

}

else {

bar += "▰";

}

}

bar += `] ${percent}%`;

return bar;

};

Demo:

Generating client JavaScript scripts inline as text is a simple recipe for catastrophe for anything more complex. But put a templating framework on top, and you can do impressive, dynamic things, all backend-driven, no AJAX, no WebSockets.
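One cheap way to reduce the risk, short of a full templating framework, is to never paste raw values into the script text. The sketch below uses a hypothetical helper, updateTextScript, which serializes values with JSON.stringify and escapes "<" so the data cannot close the script tag early. It sets textContent instead of innerHTML, which is enough for a plain-text progress bar.

```javascript
// Turns any value into a JS literal that is safe inside an inline <script>.
const toScriptLiteral = (value) =>
  JSON.stringify(value).replace(/</g, "\\u003c");

// Hypothetical helper: builds an inline script that sets an element's text.
const updateTextScript = (elementId, text) =>
  `<script>document.getElementById(${toScriptLiteral(elementId)}).textContent = ${toScriptLiteral(text)};</script>`;

// Usage in the progress example (assuming generateProgressBar from above):
// responseStream.write(updateTextScript("progress-bar", generateProgressBar(percent)) + bigStr);
```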

More on Lambda Response Streaming

This post is a record of my investigation into streaming small response chunks, driven, as you can see, by crappy JS. But after figuring that out, my next question was: what’s the best way to work with Lambda Response Streams for anything more complex than pipelining output from one stream to another? That’s what the next post will be about. There will be TypeScript. And there will be generator functions!